The Ethics of Autonomous Driving: Navigating Responsibility in Accidents

This article explores the complex legal, moral, and technological questions surrounding liability when self-driving vehicles cause harm, and how those questions affect manufacturers, software developers, and the public.

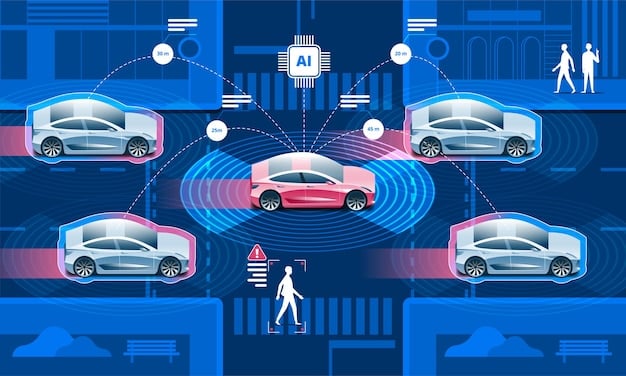

The rise of self-driving cars promises increased safety and convenience, but with great innovation comes great responsibility. When an accident occurs involving an autonomous vehicle, the question quickly arises: who is responsible? This article delves into the multifaceted issues of liability, ethics, and the future of autonomous vehicle regulation.

Understanding the Levels of Autonomous Driving

Before assigning blame in an autonomous vehicle accident, it’s crucial to understand the various levels of automation. These levels dictate the degree to which the vehicle can operate independently and, consequently, where responsibility lies.

Defining Automation Levels

SAE International (formerly the Society of Automotive Engineers) defines six levels of driving automation in its J3016 standard, ranging from 0 (no automation) to 5 (full automation). These levels provide a framework for assigning responsibility in accidents.

- Level 0: No Automation – The human driver controls all aspects of driving.

- Level 1: Driver Assistance – The vehicle offers assistance with steering or acceleration/deceleration (e.g., adaptive cruise control).

- Level 2: Partial Automation – The vehicle can control steering and acceleration/deceleration simultaneously under certain conditions, but the driver must remain attentive and ready to intervene.

- Level 3: Conditional Automation – The vehicle can perform all driving tasks under specific conditions, but the driver must be ready to take control when prompted.

- Level 4: High Automation – The vehicle can perform all driving tasks under certain conditions without driver intervention.

- Level 5: Full Automation – The vehicle can perform all driving tasks under all conditions.

The level of automation at the time of the accident is a key factor in determining responsibility. Higher levels of automation shift more responsibility away from the driver and potentially towards the vehicle manufacturer or technology provider.
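The levels above can be captured in a small lookup. The following is a minimal sketch, not part of any standard API: the names `SAELevel`, `human_must_supervise`, and `human_is_fallback` are hypothetical, and the cut-offs simply follow the descriptions in the list (constant attention through Level 2, readiness to take over on request at Level 3).

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """SAE driving automation levels, named as in the list above."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def human_must_supervise(level: SAELevel) -> bool:
    """True when the human must stay attentive at all times (Levels 0-2)."""
    return level <= SAELevel.PARTIAL_AUTOMATION


def human_is_fallback(level: SAELevel) -> bool:
    """True when a human must be ready to take over, at least when prompted (Levels 0-3)."""
    return level <= SAELevel.CONDITIONAL_AUTOMATION


# Print one row per level: number, name, and the two flags.
for level in SAELevel:
    print(level.value, level.name, human_must_supervise(level), human_is_fallback(level))
```

A lookup like this only records what the human is expected to do at each level. Who is actually liable in a given accident still depends on the facts and the applicable law.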

Implications for Liability

The higher the level of automation, the more complex the liability questions become. Accidents involving Level 0 to Level 2 vehicles are generally handled similarly to traditional car accidents, with the driver typically held responsible. However, accidents involving Level 3 and higher vehicles introduce new layers of complexity, potentially involving the manufacturer or the software provider, and raising the question of how to treat decisions made by the vehicle’s AI system.

Understanding the levels of autonomous driving is essential for navigating the ethical and legal challenges that arise when accidents occur. This framework helps to clarify the roles and responsibilities of various parties involved, paving the way for fair and just resolutions.

Potential Liable Parties in Autonomous Vehicle Accidents

Determining who is responsible in an accident involving a self-driving car is a complex task. Several parties could potentially be held liable, depending on the circumstances of the accident and the level of automation involved.

The Vehicle Manufacturer

Manufacturers could be held liable if the accident was caused by a defect in the vehicle’s design or manufacturing. This could include faulty sensors, flawed algorithms, or inadequate testing.

Software and Technology Providers

Autonomous vehicles rely on complex software and AI systems. If an accident is caused by a software glitch, a programming error, or a failure in the AI’s decision-making process, the software provider could be held liable.

The “Driver” or Vehicle Occupant

Even in highly automated vehicles, the human occupant may still bear some responsibility, particularly if they were not paying attention to the road or failed to take control when prompted by the vehicle. However, the extent of their responsibility will depend on the level of automation and the circumstances of the accident.

Other Negligent Parties

In some cases, other parties may be responsible for the accident, such as negligent road maintenance crews, other drivers, or even hackers who compromised the vehicle’s systems.

Identifying all potential liable parties is a critical step in determining responsibility in autonomous vehicle accidents. This requires a thorough investigation of the accident, including a review of the vehicle’s data logs, sensor readings, and software code.

Ethical Frameworks for Autonomous Driving

Beyond legal considerations, ethical frameworks play a crucial role in guiding the development and deployment of autonomous vehicles. These frameworks help to address the difficult moral dilemmas that arise when self-driving cars must make split-second decisions in accident scenarios.

The Trolley Problem

The trolley problem is a classic thought experiment that highlights the ethical challenges of autonomous driving. It presents a scenario where a trolley is hurtling down a track towards a group of people. The only way to save them is to divert the trolley onto another track, where it will kill a single person. Should the trolley be diverted? This scenario forces us to confront the question of whether it is morally permissible to sacrifice one life to save others. A self-driving car faces an analogous choice when a crash is unavoidable and it must choose between two harmful outcomes, such as braking in its lane or swerving towards someone else.

Utilitarianism

Utilitarianism suggests that the best course of action is the one that maximizes overall happiness or well-being. In the context of autonomous driving, this might mean programming vehicles to minimize the total number of injuries or fatalities, even if it means sacrificing the occupants of the vehicle.

Deontology

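Deontology, on the other hand, emphasizes moral duties and rules. It might argue that it is always wrong to intentionally harm someone, even if it would save others. This could lead to programming vehicles never to steer deliberately into a person, even when doing so would reduce the total harm. Some also invoke a duty of care towards occupants to argue that a vehicle should prioritize the people inside it, although that conclusion does not follow from deontology alone.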
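To make the contrast concrete, here is a toy sketch of the two decision rules applied to the trolley scenario. It is an illustration only, not how any real vehicle is programmed: the `Option` type, its fields, and both functions are hypothetical, and the casualty numbers are just the five-versus-one figures commonly used for the thought experiment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    name: str
    expected_casualties: int       # hypothetical estimate of people harmed
    actively_redirects_harm: bool  # True if choosing it intentionally harms someone


def utilitarian_choice(options):
    """Pick the option with the fewest expected casualties."""
    return min(options, key=lambda o: o.expected_casualties)


def deontological_choice(options):
    """Rule out options that intentionally harm someone, then minimize casualties.

    Falls back to considering every option if all of them break the rule.
    """
    permitted = [o for o in options if not o.actively_redirects_harm]
    return min(permitted or options, key=lambda o: o.expected_casualties)


trolley = [
    Option("stay on course", expected_casualties=5, actively_redirects_harm=False),
    Option("divert", expected_casualties=1, actively_redirects_harm=True),
]

print(utilitarian_choice(trolley).name)    # divert
print(deontological_choice(trolley).name)  # stay on course
```

The two rules disagree on the same inputs, which is the core of the dilemma: the choice of rule, not the sensor data, decides the outcome.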

Ethical frameworks provide a crucial foundation for designing and regulating autonomous vehicles. By considering these frameworks, we can ensure that self-driving cars are programmed to act in a way that is consistent with our moral values.

Legal Precedents and Challenges

As autonomous vehicle technology continues to evolve, legal precedents are slowly being established to address the unique challenges posed by self-driving car accidents. However, significant legal hurdles remain.

Product Liability Laws

Product liability laws hold manufacturers responsible for defects in their products that cause harm. These laws could be applied to autonomous vehicle accidents caused by faulty sensors, flawed algorithms, or inadequate testing.

Negligence Laws

Negligence laws hold individuals or entities responsible for failing to exercise reasonable care, resulting in harm to others. These laws could be applied to situations where a human driver failed to take control of an autonomous vehicle when prompted, or where a road maintenance crew failed to properly maintain roadways.

The Challenge of Causation

One of the biggest legal challenges in autonomous vehicle accidents is establishing causation. It can be difficult to prove that a specific defect or act of negligence directly caused the accident. This is particularly true in cases where the accident involved complex interactions between multiple factors, such as the vehicle’s sensors, software, and the actions of other drivers.

Legal precedents and challenges continue to shape the legal landscape surrounding autonomous vehicles. As more accidents occur and more cases are litigated, the legal framework will become clearer, providing guidance for manufacturers, regulators, and the public.

Insurance and Autonomous Vehicles

The rise of autonomous vehicles is forcing the insurance industry to adapt and develop new policies to address the unique risks associated with self-driving cars. Traditional auto insurance policies may not adequately cover accidents involving highly automated vehicles, requiring new approaches to risk assessment and coverage.

Shifting Liability

As responsibility shifts from human drivers to vehicle manufacturers and technology providers, insurance companies will need to adjust their underwriting and claims processes. This could involve developing new types of policies that cover product liability, software errors, and cyberattacks. It’s also possible that traditional auto insurance could become less necessary, with manufacturers assuming more responsibility for accidents involving their vehicles.

Data Collection and Analysis

Autonomous vehicles generate vast amounts of data about their driving behavior, sensor readings, and environmental conditions. Insurance companies can use this data to analyze accident patterns, identify high-risk areas, and develop more accurate risk models. This data can also be used to reconstruct accidents and determine the cause of the crash.
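As a rough illustration of accident reconstruction from logged data, the sketch below walks through a simplified event log to find who was in control at the moment of impact and how much warning the driver received. The log format, the event names, and the `summarize` function are all assumptions made for this example. Real vehicle data recorders use their own, far richer formats.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class LogEvent:
    t: float   # seconds since the start of the trip
    kind: str  # "system_engaged", "takeover_requested", "driver_took_control", "impact"


def summarize(events):
    """Return who was in control at impact and how much warning the driver had."""
    controller = "driver"  # assume the human is driving until the system engages
    request_time: Optional[float] = None
    for e in sorted(events, key=lambda e: e.t):
        if e.kind == "system_engaged":
            controller, request_time = "system", None
        elif e.kind == "takeover_requested":
            request_time = e.t
        elif e.kind == "driver_took_control":
            controller, request_time = "driver", None
        elif e.kind == "impact":
            warning = None if request_time is None else e.t - request_time
            return {"controller_at_impact": controller, "warning_seconds": warning}
    return None  # no impact recorded in this log


log = [
    LogEvent(0.0, "system_engaged"),
    LogEvent(120.0, "takeover_requested"),
    LogEvent(122.5, "impact"),
]
print(summarize(log))
```

In this hypothetical log the system was still in control at impact and the driver had 2.5 seconds of warning, the kind of fact an insurer or court might weigh when apportioning responsibility.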

The Role of Regulation

Government regulation will play a critical role in shaping the insurance landscape for autonomous vehicles. Regulators will need to establish clear standards for safety, testing, and data privacy. They will also need to develop guidelines for insurance coverage and liability in the event of an accident.

Insurance and autonomous vehicles are deeply intertwined, each influencing the other. As autonomous technology advances, the insurance sector must evolve to provide appropriate coverage and promote safety.

The Future of Autonomous Vehicle Regulation

The regulatory landscape for autonomous vehicles is still evolving, with governments around the world grappling with how to best regulate this rapidly developing technology. Clear and consistent regulations are essential for ensuring the safety of autonomous vehicles, fostering innovation, and building public trust.

Federal vs. State Regulation

In the United States, there is ongoing debate about whether autonomous vehicles should be regulated at the federal or state level. Federal regulation could provide a more consistent and uniform set of rules, while state regulation could allow for greater flexibility to address local conditions and concerns. Some argue for a hybrid approach, with the federal government setting high-level safety standards and states having the flexibility to implement those standards in a way that makes sense for their specific needs.

Cybersecurity and Data Privacy

As autonomous vehicles become more connected, cybersecurity and data privacy are becoming increasingly important regulatory concerns. Regulations are needed to protect vehicles from hacking and unauthorized access, and to ensure that the data collected by autonomous vehicles is used responsibly and ethically.

Public Acceptance

Ultimately, the success of autonomous vehicles will depend on public acceptance. Regulations that promote safety, transparency, and accountability can help to build public trust and encourage widespread adoption of this technology.

The future of autonomous vehicle regulation is uncertain, but it is clear that governments must act to ensure that this technology is developed and deployed in a safe, responsible, and ethical manner.

| Key Point | Brief Description |
|---|---|
| 🤖 Automation Levels | Define the degree of vehicle independence and liability. |
| ⚖️ Liable Parties | Manufacturers, software providers, or the driver may be responsible. |
| 🚦 Ethical Frameworks | Utilitarianism and deontology guide moral decision-making in accidents. |
| 🛡️ Insurance Adaptations | New policies are needed to cover the unique risks of self-driving cars. |

Frequently Asked Questions

At what level of automation does responsibility shift away from the driver?

Typically, from Level 3 and above, the responsibility starts to shift from the driver to the manufacturer or technology provider, assuming the system is functioning as intended.

What is the trolley problem in the context of autonomous driving?

It’s a moral dilemma where the vehicle must choose between two unavoidable accident scenarios, forcing a decision on who to prioritize or sacrifice.

How do product liability laws apply to autonomous vehicle accidents?

If an accident is caused by a defect in the vehicle’s design or manufacturing, product liability laws can hold the manufacturer responsible for the resulting damages.

How do insurers use the data collected by autonomous vehicles?

Data collected by the vehicle is used to analyze accident patterns, identify risks, and reconstruct events, helping insurers assess liability and adjust premiums.

What are the main challenges in regulating autonomous vehicles?

Balancing innovation with safety, ensuring cybersecurity, addressing data privacy concerns, and building public trust are all crucial and challenging aspects of regulation.

Conclusion

The question of who is responsible when an autonomous vehicle crashes has no easy answer. Determining liability in autonomous vehicle accidents requires careful consideration of the level of automation, the potential liable parties, ethical frameworks, legal precedents, and insurance implications. As the technology continues to develop, regulations and legal precedents will evolve, hopefully providing more clarity and guidance for all stakeholders.